Boltzmann Distribution, Introduction to Partition Functions

Review: practice on your own

What is the maximum value of entropy that a discrete, $N$-state system can have? What is the minimum? That is, if I have a system with $N$ possible states and the probabilities associated with each of those states are $p_1, p_2, \ldots, p_N$, what values of $p_i$ yield the largest values of $S = -k_B \sum_i p_i \ln p_i$? The smallest?

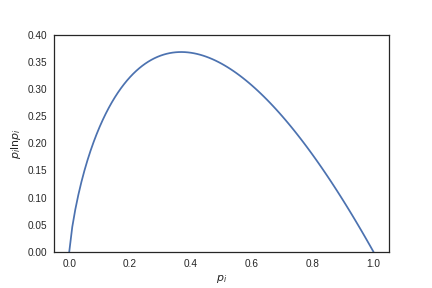

Let’s start with the minimum. Plotting the function gives the following:

The smallest possible value for entropy is 0. This occurs at $p = 0$ and $p = 1$. That means that we could build up a distribution that looks like $(1, 0, 0, \ldots, 0)$ and the entropy of that distribution would be zero.

The only reason that sort of intuition worked is because we got lucky: the two values $p = 0$ and $p = 1$ can easily be combined to obtain a normalized distribution. You couldn’t, however, just “eyeball” the maximum value of entropy here. Why? Because the maximum of the curve occurs at $p = 1/e \approx 0.37$-ish, and you couldn’t just make a distribution $(0.37, 0.37, \ldots, 0.37)$. It’s not normalized!

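So we have to get at the max through Lagrange multipliers. We maximize $S/k_B = -\sum_i p_i \ln p_i$ subject to the normalization constraint $\sum_i p_i = 1$, with multiplier $\alpha$:

$$\mathcal{L} = -\sum_{i=1}^{N} p_i \ln p_i - \alpha \left( \sum_{i=1}^{N} p_i - 1 \right)$$

Taking partials, we find

$$\frac{\partial \mathcal{L}}{\partial p_i} = -\ln p_i - 1 - \alpha = 0 \quad \Longrightarrow \quad p_i = e^{-1-\alpha}$$

That means every single $p_i$ is equal to the same constant! Normalization then fixes that constant: $p_i = 1/N$, so $S_{\max} = k_B \ln N$.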

The maximum entropy distribution (with no constraints beyond normalization!!) is a uniform distribution. On the other hand, we found that the maxEnt distribution for known mean and variance was the normal distribution.
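As a quick numerical sanity check of the uniform-distribution result, here is a minimal Python sketch. The helper name `entropy`, the choice of four states, and the random sampling scheme are illustrative assumptions, not part of the lecture; entropy is reported in units of $k_B$.

```python
import math
import random

def entropy(p):
    """Return sum of -p_i ln p_i (entropy in units of k_B), treating 0 ln 0 as 0."""
    return sum(-x * math.log(x) for x in p if x > 0)

n_states = 4  # illustrative choice
uniform = [1 / n_states] * n_states
certain = [1.0, 0.0, 0.0, 0.0]

# Draw random normalized distributions and record the largest entropy seen.
rng = random.Random(0)
best_random = 0.0
for _ in range(10_000):
    w = [rng.random() for _ in range(n_states)]
    total = sum(w)
    best_random = max(best_random, entropy([x / total for x in w]))

print("certain :", entropy(certain))
print("uniform :", entropy(uniform), "vs ln N =", math.log(n_states))
print("no random draw beats uniform:", best_random <= entropy(uniform) + 1e-12)
```

Random sampling is not a proof, of course; it only illustrates that no normalized distribution we try exceeds $\ln N$.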

Maximizing multiplicities subject to constraints

In physical systems, we often only know about ensemble average quantities (i.e., thermodynamic variables) rather than details of the particular microstates.

This is analogous to rolling a die and only knowing the average value of the rolls, rather than the values of each individual roll.

So anyway, if we only know the average values of a probability distribution, but not the distribution itself, what underlying probability distribution should we assume? If we only know the average energy of a system, what probability distribution should we assign to the energy?

Well, we know that systems in equilibrium are in states of maximum multiplicity, and we found last lecture that maximizing multiplicity corresponds to maximizing entropy. So we have to solve the following constrained optimization problem:

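Maximize $S = -k_B \sum_i p_i \ln p_i$ subject to

- $\sum_i p_i = 1$
- $\sum_i p_i E_i = \langle E \rangle$, where $E_i$ is the energy of microstate $i$.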

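Plug and chug with Lagrange multipliers. Dropping the constant $k_B$ and introducing multipliers $\alpha$ (for normalization) and $\beta$ (for the average energy $\langle E \rangle = \sum_i p_i E_i$):

$$\mathcal{L} = -\sum_i p_i \ln p_i - \alpha \left( \sum_i p_i - 1 \right) - \beta \left( \sum_i p_i E_i - \langle E \rangle \right)$$

$$\frac{\partial \mathcal{L}}{\partial p_i} = -\ln p_i - 1 - \alpha - \beta E_i = 0 \quad \Longrightarrow \quad p_i = e^{-1-\alpha} e^{-\beta E_i}$$

Imposing normalization eliminates $\alpha$:

$$p_i = \frac{e^{-\beta E_i}}{\sum_j e^{-\beta E_j}}$$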
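To make the die analogy concrete, the sketch below assumes the constrained maximization yields probabilities of the exponential form $p_i \propto e^{-\beta i}$ for face value $i$, and solves numerically for the multiplier $\beta$ that reproduces a given average roll. The function names, the bisection bracket, and the target means are hypothetical choices for illustration.

```python
import math

def boltzmann_die(beta, faces=range(1, 7)):
    """Max-entropy probabilities p_i = exp(-beta*i) / sum_j exp(-beta*j)."""
    weights = [math.exp(-beta * i) for i in faces]
    z = sum(weights)  # normalization factor
    return [w / z for w in weights]

def mean_roll(beta, faces=range(1, 7)):
    """Average face value under the distribution for this beta."""
    return sum(i * p for i, p in zip(faces, boltzmann_die(beta, faces)))

def solve_beta(target_mean, lo=-50.0, hi=50.0, tol=1e-12):
    """Bisection for beta; mean_roll decreases monotonically as beta grows."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_roll(mid) > target_mean:
            lo = mid  # mean too high: need a larger beta
        else:
            hi = mid
    return 0.5 * (lo + hi)

beta_fair = solve_beta(3.5)  # average of a fair die
beta_low = solve_beta(3.0)   # average biased toward low faces
print(beta_fair, boltzmann_die(beta_fair))
print(beta_low, boltzmann_die(beta_low))
```

An average of 3.5 should give $\beta \approx 0$, i.e. the uniform distribution, while a lower average gives $\beta > 0$ and probabilities that decay with face value.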

Partition functions: the most important thing in statistical mechanics

The denominator (normalization factor) above is called the “partition function”:

$$Z = \sum_j e^{-\beta E_j}, \qquad \beta = \frac{1}{k_B T}$$

It gets its own name because it’s so critically important.

We’ll find, as we dive into thermodynamics, that it’s really messy and challenging to relate multiple thermodynamic variables to one another. The partition function is amazing and almost magical in that you can use just that quantity to get basically all the thermodynamic variables you could possibly want. That may seem opaque now, which is ok. We have to do thermodynamics before it “clicks”.

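*Example: Four bead polymer.* Suppose we have a four bead polymer that can be in one of five conformations. One conformation is a “closed” state with energy $0$, and the other four are “open” states, each having energy $\varepsilon_0 > 0$. Let’s find the probabilities of being in the open or closed state at high and low temperatures.

We can write down the partition function immediately:

$$Z = e^{-0/k_B T} + 4 e^{-\varepsilon_0 / k_B T} = 1 + 4 e^{-\varepsilon_0 / k_B T}$$

The probabilities are simply the Boltzmann factors divided by their normalization (the partition function!):

$$p_{\text{closed}} = \frac{1}{1 + 4 e^{-\varepsilon_0 / k_B T}}, \qquad p_{\text{open}} = \frac{4 e^{-\varepsilon_0 / k_B T}}{1 + 4 e^{-\varepsilon_0 / k_B T}}$$

Let’s take the low temperature limit $T \to 0$:

$$e^{-\varepsilon_0 / k_B T} \to 0 \quad \Longrightarrow \quad p_{\text{closed}} \to 1, \qquad p_{\text{open}} \to 0$$

At low temperatures, energy effects dominate. We favor the ground state of the system.

Now the high temperature limit, $T \to \infty$:

$$e^{-\varepsilon_0 / k_B T} \to 1 \quad \Longrightarrow \quad p_{\text{closed}} \to \frac{1}{5}, \qquad p_{\text{open}} \to \frac{4}{5}$$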

At high temperatures, entropic effects dominate. We favor the most uniform distribution of states!
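The two limits can also be checked numerically. The following is a minimal sketch, assuming the closed state sits at energy 0 and each of the four open states at a positive energy `eps` (these values and the function name `four_bead_probabilities` are assumptions for illustration). Temperature enters only through the dimensionless ratio $k_B T / \varepsilon_0$.

```python
import math

def four_bead_probabilities(kT_over_eps, n_open=4):
    """Return (p_closed, p_open_total) for one closed state at energy 0
    and n_open open states at energy eps (assumed energies)."""
    boltz_open = math.exp(-1.0 / kT_over_eps)  # Boltzmann factor of one open state
    z = 1.0 + n_open * boltz_open              # partition function
    return 1.0 / z, n_open * boltz_open / z

# Sweep from cold to hot in units of eps / k_B.
for t in (0.01, 0.5, 1.0, 5.0, 1000.0):
    p_closed, p_open = four_bead_probabilities(t)
    print(f"kT/eps = {t:>7}: p_closed = {p_closed:.4f}, p_open = {p_open:.4f}")
```

At the cold end nearly all the probability sits in the closed state, and at the hot end the split approaches 1/5 versus 4/5, which is just the uniform distribution over the five conformations.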

Practice on your own

Get started on the homework!